I have written before about how SSMS generates restore commands that will fail. This post shows what that can look like, using screenshots. If you always use T-SQL directly to perform restores, then you won’t be affected by this. But if you expect to be able to perform a restore using the restore dialog, then read on.

The problem

The issue is that SSMS bases a restore sequence on backups that are impossible to restore from. There are two cases I have found:

Copy only backups

The purpose of the COPY_ONLY option for the backup command is to do an out-of-band backup. I.e., you do an ad-hoc backup as a one-time thing, restore it on your test server and then delete the backup file. With the copy only option you say that you don’t want this ad-hoc backup to affect your scheduled backups. We expect SSMS not to base the restore sequence on these backups, since it is likely that the backup file has been deleted.
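As a minimal sketch of such an ad-hoc backup, the command could look something like the following. The database name and the R:\ path are the ones used in the test script at the end of this post; the file name is just an assumption for illustration.

```sql
--Ad-hoc full backup that does not reset the differential base.
--The file name below is hypothetical; adjust path and name to your environment.
BACKUP DATABASE theBackupTestTib
TO DISK = N'R:\theBackupTestTib_adhoc.bak'
WITH COPY_ONLY, INIT;
```

The backup is still recorded in the backup history in msdb, which is why the restore GUI can end up picking it even after the file has been deleted.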

Snapshot backups

If you perform a snapshot of a virtual machine (or “checkpoint” as Hyper-V calls it) then this snapshot might interact with VSS in Windows so you get a consistent state of your SQL Server databases. I.e., when you restore/apply such a snapshot, your databases should be just fine. This is great, as long as it doesn’t mess up the SQL Server backups that we produce. It won’t. But the restore GUI in SSMS can be messed up by such a snapshot.

The timeline dialog

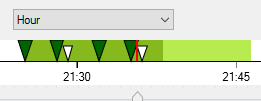

The problem seems to occur in the timeline dialog. In the restore GUI, there’s a nifty timeline dialog where you can use a slider to graphically specify the point in time you want to restore the database to. The GUI uses the backup history information in msdb and based on that figures out what restore operations to perform. This is actually really nice and friendly, as long as it works. The problem is that when you specify an earlier point in time, it sometimes uses the wrong full backup: a full backup which isn’t possible to restore from.
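To see roughly what the GUI has to work with, you can look at the backup history yourself. The query below is a sketch, not what SSMS actually executes; it only uses the msdb tables backupset and backupmediafamily, and it assumes the test database name from the script at the end of this post.

```sql
--Backup history for one database, oldest first.
--is_copy_only and is_snapshot flag the two problematic kinds of backup.
SELECT
 bs.backup_set_id
,bs.backup_start_date
,bs.backup_finish_date
,CASE bs.type
   WHEN 'D' THEN 'Full'
   WHEN 'I' THEN 'Differential'
   WHEN 'L' THEN 'Log'
   ELSE bs.type
 END AS backup_type
,bs.is_copy_only
,bs.is_snapshot
,bmf.physical_device_name
FROM msdb.dbo.backupset AS bs
INNER JOIN msdb.dbo.backupmediafamily AS bmf
  ON bmf.media_set_id = bs.media_set_id
WHERE bs.database_name = N'theBackupTestTib'
ORDER BY bs.backup_finish_date;
```

A backup striped over several files returns one row per file, so expect duplicates of the backup set columns in that case.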

Examples:

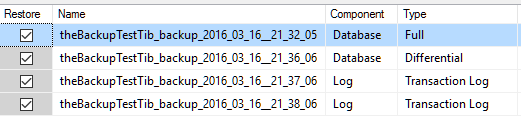

Above, the GUI correctly skips a copy only backup. A full copy only backup was produced after the full backup listed above, but the GUI is smart enough to not base the restore sequence on this copy only backup. This is how it should work.

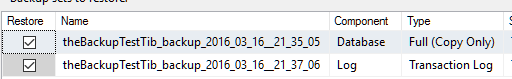

Above, the GUI incorrectly bases the restore on a copy only backup. After using the timeline dialog to point to an earlier point in time, you can see that the GUI has now changed so it bases the restore on this potentially non-existing copy only backup. Not a nice situation to be in if the person doing the restore hasn’t practiced using the T-SQL RESTORE commands.
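For reference, a hand-written restore sequence that starts from the regular full backup could look something like this. It is only a sketch: all file names and the STOPAT time are hypothetical, and a real sequence depends on which backups you actually have.

```sql
--Start from the regular (not copy only) full backup. File names are hypothetical.
RESTORE DATABASE theBackupTestTib
FROM DISK = N'R:\theBackupTestTib_FULL.bak'
WITH NORECOVERY, REPLACE;

--Apply the subsequent log backups in order.
RESTORE LOG theBackupTestTib
FROM DISK = N'R:\theBackupTestTib_LOG_1.trn'
WITH NORECOVERY;

--Stop at the desired point in time in the last log backup and bring the database online.
RESTORE LOG theBackupTestTib
FROM DISK = N'R:\theBackupTestTib_LOG_2.trn'
WITH STOPAT = '2016-03-16T13:00:00', RECOVERY;
```

Note that REPLACE overwrites the existing database, so try this on a test server first.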

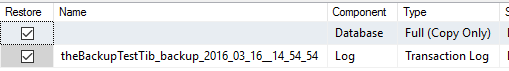

Above, the GUI correctly skips a snapshot backup. A snapshot backup using VSS was produced after the full backup listed above, but the GUI is smart enough to not base the restore sequence on this snapshot backup. This is how it should work.

![]()

Above, the GUI incorrectly bases the restore on a snapshot backup. After using the timeline dialog to point to an earlier point in time, you can see that the GUI has now changed so it bases the restore on the snapshot backup. This is immensely bad since the snapshot doesn’t exist in SQL Server. It is just a placeholder so that SQL Server is aware that a snapshot was performed at that point in time. Look at the RESTORE command it produces!
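If you want to double-check which full backup a restore to a given point in time should be based on, a query along these lines can help. It is a sketch that follows the expectation in this post (skip copy only and snapshot backups), not a description of the logic inside SSMS. The @stop_at value is a made-up example.

```sql
DECLARE
 @db SYSNAME = N'theBackupTestTib'
,@stop_at DATETIME = '2016-03-16T13:00:00' --Hypothetical point in time to restore to.

--Most recent regular full backup finished before the chosen point in time.
SELECT TOP (1)
 bs.backup_set_id
,bs.backup_finish_date
,bmf.physical_device_name
FROM msdb.dbo.backupset AS bs
INNER JOIN msdb.dbo.backupmediafamily AS bmf
  ON bmf.media_set_id = bs.media_set_id
WHERE bs.database_name = @db
  AND bs.type = 'D'          --Full database backup
  AND bs.is_copy_only = 0    --Skip ad-hoc copy only backups
  AND bs.is_snapshot = 0     --Skip snapshot placeholders
  AND bs.backup_finish_date <= @stop_at
ORDER BY bs.backup_finish_date DESC;
```

Compare the file it returns with the one the restore dialog lists as the first step. If they differ, script the restore yourself instead of trusting the dialog.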

You might wonder how to tell whether something is producing VSS snapshots of your machine. You can see that in the errorlog file. Here are a few lines from the errorlog on a machine where I used Hyper-V to produce a proper VSS snapshot (edited for readability):

2016-03-16 13:30:23.75 I/O is frozen on database Adventureworks2014.

2016-03-16 13:30:23.75 I/O is frozen on database theBackupTestTib.

2016-03-16 13:30:23.75 I/O is frozen on database master.

2016-03-16 13:30:29.33 I/O was resumed on database master.

2016-03-16 13:30:29.33 I/O was resumed on database theBackupTestTib.

2016-03-16 13:30:29.34 I/O was resumed on database Adventureworks2014.
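If you prefer to search for these messages from a query window instead of opening the file, something like the following should do. Note that sp_readerrorlog is an undocumented procedure, so treat the parameter meanings in the comments as commonly used conventions rather than an official contract.

```sql
--Search the current SQL Server errorlog for the freeze messages.
--Parameters (undocumented): log file number (0 = current), log type (1 = SQL Server), search string.
EXEC sp_readerrorlog 0, 1, N'I/O is frozen';
```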

Bottom line

You already know this. Practice doing restores – as realistically as possible and using different scenarios.

Disclaimer: Things might change. The tests I did were using SSMS 2014 for the copy only backups and SSMS 2016 CTP 3.3 for the snapshot backups. I have seen this behaviour since SQL Server 2012, though. I wish that this will be fixed in a future version of SSMS, but considering that my requests have gone unnoticed before, I don’t have much hope. But if you do see something different, let me know and I can re-test. Just make sure to add as many details as possible.

I used my stored procedure (the URL is in the script comments below) to perform the backups, which made the T-SQL used for these tests a bit easier to read:

--Show that restore based on backup history isn't possible

--if the most recent full backup is missing, even if COPY_ONLY was used.

--Create the BackupDbWithTs procedure first:

--http://www.karaszi.com/sqlserver/util_backup_script_like_MP.asp

--We will do full backups, diff backups and log backups.

--In between, we will also do a COPY_ONLY full backup (think of it as an ad-hoc backup subsequently deleted).

---------------------------------------------------------------------------------------------

--Drop and create the test database

--NOTE: This will drop a database named theBackupTestTib if such exists!!!!

---------------------------------------------------------------------------------------------

IF DB_ID('theBackupTestTib') IS NOT NULL

DROP DATABASE theBackupTestTib

GO

CREATE DATABASE theBackupTestTib

GO

--Set to full recovery

ALTER DATABASE theBackupTestTib SET RECOVERY FULL

GO

---------------------------------------------------------------------------------------------

--Create a table so we can make a modification in the database between each backup

---------------------------------------------------------------------------------------------

IF OBJECT_ID('theBackupTestTib.dbo.t') IS NOT NULL DROP TABLE theBackupTestTib.dbo.t

GO

CREATE TABLE theBackupTestTib.dbo.t(c1 INT IDENTITY, c2 CHAR(30) DEFAULT 'Hello backup world')

GO

---------------------------------------------------------------------------------------------

--Declare and set variables.

--Adjust to your liking.

DECLARE

@i INT = 1

,@time_in_between VARCHAR(20) = '00:01:00' --Time between each backup. 1 sec ('00:00:01') is fine to test that the restore GUI works.

--Set to higher if you want to use the timeline restore dialog and visually see your backups.

,@iterations INT = 2 --Number of iterations. Adjust as you wish, but do at least two.

,@db SYSNAME = 'theBackupTestTib'

,@path VARCHAR(200) = 'R:\' --Where to put your backup files. Delete backup files after you are finished.

WHILE @i <= @iterations

BEGIN

INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES;

EXEC master..BackupDbWithTs @db, @path, 'FULL', 'N', 'N', 'N'; INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES; WAITFOR DELAY @time_in_between

EXEC master..BackupDbWithTs @db, @path, 'LOG ', 'N', 'N', 'N'; INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES; WAITFOR DELAY @time_in_between

EXEC master..BackupDbWithTs @db, @path, 'LOG ', 'N', 'N', 'N'; INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES; WAITFOR DELAY @time_in_between

--Perform the COPY_ONLY or snapshot backup now:

EXEC master..BackupDbWithTs @db, @path, 'FULL', 'N', 'N', 'Y'; INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES; WAITFOR DELAY @time_in_between --COPY_ONLY

EXEC master..BackupDbWithTs @db, @path, 'DIFF', 'N', 'N', 'N'; INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES; WAITFOR DELAY @time_in_between

EXEC master..BackupDbWithTs @db, @path, 'LOG ', 'N', 'N', 'N'; INSERT INTO theBackupTestTib.dbo.t DEFAULT VALUES; WAITFOR DELAY @time_in_between

EXEC master..BackupDbWithTs @db, @path, 'LOG ', 'N', 'N', 'N'; WAITFOR DELAY @time_in_between

SET @i = @i + 1

END